Understanding Concurrency Control: Balancing Performance and Data Integrity

Concurrency control protocols: how they handle data collisions

Today, cloud computing resources are widely available, allowing us to spread work across many machines to speed up data processing and improve performance. However, this often leads to a challenge known as data collision, which occurs when multiple processes attempt to write to the same data (a row, a table, or a data file) at the same time. This is why effective concurrency control mechanisms are needed to preserve data integrity and prevent conflicting writes.

Most database management systems (DBMS), such as PostgreSQL and MySQL, include mechanisms to handle concurrency effectively. Lightweight OLAP databases like DuckDB also implement concurrency control. Additionally, open table formats on object storage, such as Apache Iceberg and Delta Lake, support concurrency management. These systems use various techniques, including two-phase locking, multi-version concurrency control (MVCC), and timestamp ordering, to ensure data integrity and consistency when multiple users or processes operate on the same data simultaneously.

Let’s dive deeper into some common protocols for handling concurrency.

When dealing with conflicts, you have two options:

- Allow conflicts to happen, but detect them when a transaction commits. This is what the optimistic approach does.

- Avoid conflicts in the first place. This is what the pessimistic approach does.

I. Optimistic method

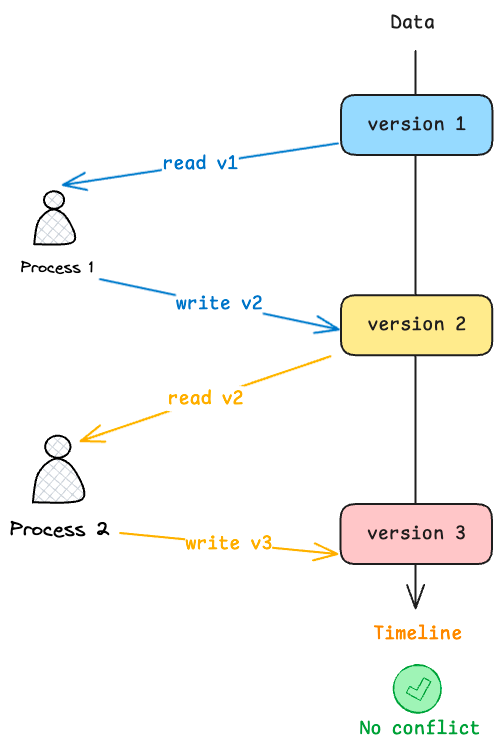

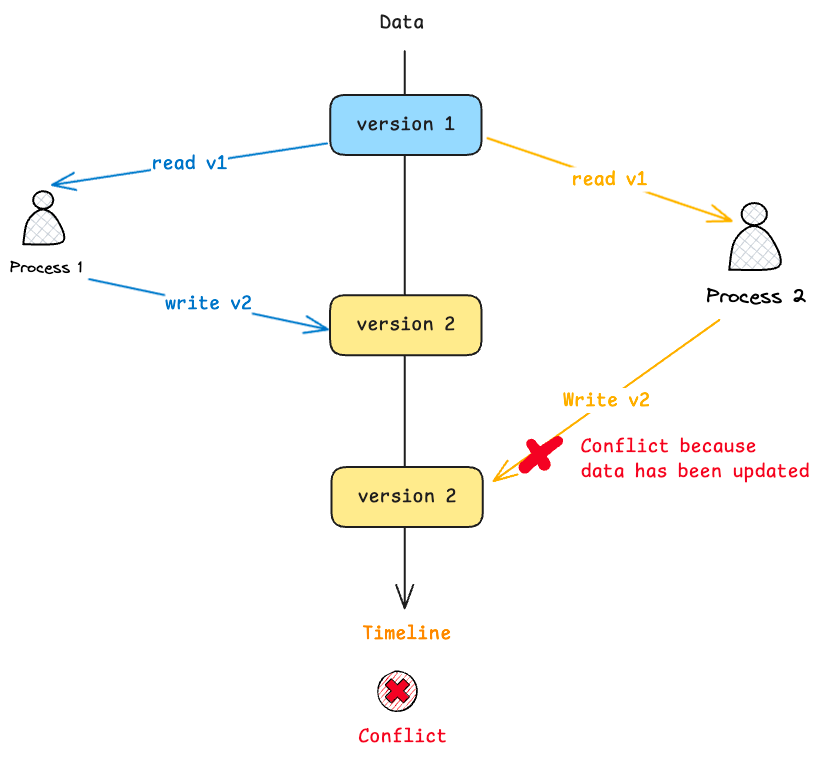

Optimistic locking is a strategy where you read a record, take note of its version number (other methods use dates, timestamps, or checksums/hashes), and check that the version hasn’t changed before you write the record back. When you write the record back, you filter the update on the version and increment the version in the same operation, so the check and the write are atomic (i.e. nobody can update the record between the moment you check the version and the moment you write it to disk).

Optimistic locking (also known as optimistic concurrency control) is generally used when data contention is low. When conflicts are rare, transactions can complete without the expense of managing locks and without waiting for other transactions’ locks to be released, which typically leads to higher throughput than lock-based concurrency control methods.

However, if data contention is high, the cost of repeatedly restarting transactions hurts performance significantly, because every aborted transaction has to re-read the data, recompute its result, and attempt the commit again.
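To make the cost of restarts concrete, here is a small in-memory sketch in which several threads increment the same counter by reading, computing, and then attempting a compare-and-set, retrying whenever the version changed underneath them. `VersionedCell`, `optimistic_increment`, and `run` are hypothetical names; the cell's internal `threading.Lock` only makes the compare-and-set step itself atomic (the role a database's conditional `UPDATE` plays), while the compute step runs without holding any lock. The final value is always correct, but the `retries` count, which varies from run to run, is the wasted work described above.

```python
import threading


class VersionedCell:
    """Hypothetical versioned record supporting an atomic compare-and-set."""

    def __init__(self, value: int = 0) -> None:
        self.value = value
        self.version = 0
        self._cas_lock = threading.Lock()  # only guards reads and the compare-and-set

    def read(self) -> tuple[int, int]:
        with self._cas_lock:
            return self.value, self.version

    def compare_and_set(self, expected_version: int, new_value: int) -> bool:
        with self._cas_lock:
            if self.version != expected_version:
                return False  # someone committed first: conflict detected
            self.value = new_value
            self.version += 1
            return True


def optimistic_increment(cell: VersionedCell, stats: dict, stats_lock: threading.Lock) -> None:
    while True:
        value, version = cell.read()  # read phase
        new_value = value + 1         # compute phase (could be expensive in practice)
        if cell.compare_and_set(version, new_value):
            return                    # validate + commit succeeded
        with stats_lock:
            stats["retries"] += 1     # conflict: discard the work and restart


def run(threads: int = 8, increments_per_thread: int = 1000) -> tuple[int, int]:
    cell = VersionedCell()
    stats = {"retries": 0}
    stats_lock = threading.Lock()

    def worker() -> None:
        for _ in range(increments_per_thread):
            optimistic_increment(cell, stats, stats_lock)

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return cell.value, stats["retries"]


if __name__ == "__main__":
    final_value, retries = run()
    print(final_value)  # 8000: no lost updates
    print(f"retries caused by conflicts: {retries}")  # varies between runs
```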

Benefits:

Optimistic locking allows for a high level of concurrency. This is particularly beneficial in read-heavy workloads, where transactions are less likely to conflict. For example, Apache Iceberg and Delta Lake use optimistic concurrency control to make commits atomic while still taking advantage of distributed computing on cloud object storage: many writers prepare their data files in parallel, and each commit succeeds only if no conflicting commit has landed since that writer read the table’s state.
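The commit step in such table formats can be thought of as an atomic "create only if it does not exist yet" on the next version of a log. The code below is a heavily simplified, hypothetical model, loosely inspired by the numbered entries in Delta Lake's transaction log; its file naming is only an approximation. It uses a local directory and `os.open` with `O_CREAT | O_EXCL` as the put-if-absent primitive. Object stores generally need their own conditional-write support or a coordinating service for this step, and real implementations also check whether a competing commit actually touched the same data before retrying. `latest_version`, `try_commit`, and `commit_with_retry` are illustrative names, not library APIs.

```python
import json
import os
import tempfile


def latest_version(log_dir: str) -> int:
    # Log entries are named by zero-padded version: 00000000000000000000.json, ...
    versions = [int(n.split(".")[0]) for n in os.listdir(log_dir) if n.endswith(".json")]
    return max(versions, default=-1)


def try_commit(log_dir: str, version: int, actions: dict) -> bool:
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        # O_EXCL makes creation fail if another writer already claimed this version.
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL)
    except FileExistsError:
        return False
    # Simplification: a real system would make the contents visible atomically.
    with os.fdopen(fd, "w") as f:
        json.dump(actions, f)
    return True


def commit_with_retry(log_dir: str, actions: dict, max_attempts: int = 5) -> int:
    for _ in range(max_attempts):
        # Read the current table version, then try to claim the next one.
        next_version = latest_version(log_dir) + 1
        if try_commit(log_dir, next_version, actions):
            return next_version
        # Another writer committed first; a real system would check here whether
        # that commit conflicts with ours before retrying on top of it.
    raise RuntimeError("too many concurrent commits, giving up")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as log_dir:
        print(commit_with_retry(log_dir, {"add": "part-0001.parquet"}))  # 0
        print(commit_with_retry(log_dir, {"add": "part-0002.parquet"}))  # 1
        # A stale writer that still believes version 1 is free loses the race:
        print(try_commit(log_dir, 1, {"add": "part-0003.parquet"}))  # False
```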

II. Pessimistic method

Pessimistic locking means locking a record for your exclusive use until you have finished with it. It offers stronger up-front protection than optimistic locking, since a conflicting writer is blocked before it can act rather than rejected at commit time, but it requires careful application design to avoid deadlocks. To use pessimistic locking, you need either a direct connection to the database (as is typical in a two-tier client-server application) or an externally available transaction ID that can be used independently of the connection.
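An in-process sketch of the same idea: before touching a record, a transaction takes that record's lock and holds it for the whole read-check-write sequence, so competing writers wait instead of failing later. `RecordLockManager`, `withdraw`, and `demo` are hypothetical helpers using one `threading.Lock` per record key. In PostgreSQL or MySQL (InnoDB), the rough equivalent is reading the row with `SELECT ... FOR UPDATE` inside a transaction.

```python
import threading


class RecordLockManager:
    """Hypothetical lock manager: one exclusive lock per record key."""

    def __init__(self) -> None:
        self._guard = threading.Lock()  # protects the dictionary of locks itself
        self._locks: dict[str, threading.Lock] = {}

    def lock_for(self, key: str) -> threading.Lock:
        # Create each record's lock lazily.
        with self._guard:
            if key not in self._locks:
                self._locks[key] = threading.Lock()
            return self._locks[key]


def withdraw(balances: dict, locks: RecordLockManager, account: str, amount: int) -> bool:
    # Hold the record lock for the entire read-check-write sequence.
    with locks.lock_for(account):
        if balances[account] < amount:
            return False
        balances[account] -= amount
        return True


def demo() -> tuple[int, int]:
    balances = {"alice": 100}
    locks = RecordLockManager()
    results: list[bool] = []
    results_lock = threading.Lock()

    def worker() -> None:
        ok = withdraw(balances, locks, "alice", 20)
        with results_lock:
            results.append(ok)

    threads = [threading.Thread(target=worker) for _ in range(10)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return balances["alice"], sum(results)


if __name__ == "__main__":
    print(demo())  # (0, 5): exactly five withdrawals of 20 fit into 100
```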

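Pessimistic locking is commonly built on the following protocol and lock types:

- Two-Phase Locking (2PL): a transaction acquires all the locks it needs during a growing phase and releases them during a shrinking phase; once it has released any lock, it may not acquire a new one. This guarantees serializable schedules and therefore data integrity, but on its own it does not prevent deadlocks.

- Shared and Exclusive Locks: shared locks allow multiple transactions to read the same data concurrently, while an exclusive lock lets a single transaction write, preventing all others from reading or writing that data until it is released.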
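The two rules above can be sketched together in a small, single-threaded model: a lock table that grants a shared (`S`) lock alongside other shared locks but never alongside an exclusive (`X`) lock, and a transaction object that enforces the two-phase rule by refusing any new lock once it has released one. `LockTable` and `Transaction` are hypothetical names, and to keep the sketch short a conflicting request is simply reported as not granted instead of being made to wait.

```python
class LockTable:
    """Hypothetical lock table with shared (S) and exclusive (X) modes."""

    def __init__(self) -> None:
        self.holders: dict[str, dict[str, str]] = {}  # item -> {txn_id: mode}

    def can_grant(self, txn_id: str, item: str, mode: str) -> bool:
        others = {t: m for t, m in self.holders.get(item, {}).items() if t != txn_id}
        if not others:
            return True
        # S is compatible only with S; X is compatible with nothing.
        return mode == "S" and all(m == "S" for m in others.values())

    def grant(self, txn_id: str, item: str, mode: str) -> None:
        current = self.holders.setdefault(item, {})
        # Allow upgrading S -> X, but never downgrade an X we already hold.
        if current.get(txn_id) != "X":
            current[txn_id] = mode

    def release(self, txn_id: str, item: str) -> None:
        self.holders.get(item, {}).pop(txn_id, None)


class Transaction:
    def __init__(self, txn_id: str, table: LockTable) -> None:
        self.txn_id = txn_id
        self.table = table
        self.shrinking = False  # becomes True after the first release
        self.held: set[str] = set()

    def lock(self, item: str, mode: str) -> bool:
        if self.shrinking:
            raise RuntimeError("2PL violation: cannot acquire a lock after releasing one")
        if not self.table.can_grant(self.txn_id, item, mode):
            return False  # a real lock manager would make the caller wait here
        self.table.grant(self.txn_id, item, mode)
        self.held.add(item)
        return True

    def unlock(self, item: str) -> None:
        self.shrinking = True  # the growing phase is over
        self.table.release(self.txn_id, item)
        self.held.discard(item)


if __name__ == "__main__":
    table = LockTable()
    t1, t2 = Transaction("T1", table), Transaction("T2", table)
    print(t1.lock("x", "S"))  # True
    print(t2.lock("x", "S"))  # True: shared locks are compatible
    print(t2.lock("x", "X"))  # False: T1 still holds a shared lock on x
```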

Different vendors can, and do, offer different lock granularities: database locks, table locks, row locks, and, in extreme cases, even column locks. For any granularity finer than the whole database, things get complicated: there must be either a mechanism to prevent deadlocks or a means to detect and resolve them. Typically, this is handled by a dedicated component called a lock manager (or lock arbiter).
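Coarse granularity is easy to observe with SQLite, which allows only one writer per database file at a time, so its write lock effectively sits at the database level. In this sketch, one connection starts a write transaction with `BEGIN IMMEDIATE`; a second connection attempting the same is refused straight away because `timeout=0` tells it not to wait. The function name `demo_database_lock` and the `t` table are illustrative.

```python
import os
import sqlite3
import tempfile


def demo_database_lock(path: str) -> str:
    # isolation_level=None: we issue BEGIN/COMMIT ourselves.
    writer = sqlite3.connect(path, isolation_level=None)
    other = sqlite3.connect(path, isolation_level=None, timeout=0)
    try:
        writer.execute("CREATE TABLE IF NOT EXISTS t (id INTEGER PRIMARY KEY, v TEXT)")
        writer.execute("BEGIN IMMEDIATE")  # take the database-wide write lock now
        writer.execute("INSERT INTO t (v) VALUES ('a')")
        try:
            other.execute("BEGIN IMMEDIATE")  # needs the same lock
            result = "second writer got the lock"
            other.execute("ROLLBACK")
        except sqlite3.OperationalError as exc:
            result = f"second writer blocked: {exc}"
        writer.execute("COMMIT")
        return result
    finally:
        other.close()
        writer.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(demo_database_lock(os.path.join(d, "demo.db")))
```

Even though the two writers might touch completely different rows, the second one is blocked, which is the price of a coarse lock; finer-grained locks avoid this but bring in the deadlock handling discussed next.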

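A deadlock occurs when two or more transactions wait indefinitely for each other to release locks. Handling this requires additional mechanisms for deadlock detection and resolution, such as lock timeouts or tracking which transaction is waiting for which and looking for cycles.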
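One common detection approach, sketched here under simplifying assumptions, is a wait-for graph: an edge `T1 -> T2` means T1 is waiting for a lock that T2 holds, and any cycle in the graph is a deadlock. `find_deadlock` is a hypothetical helper that uses depth-first search to return one cycle; a lock manager would then typically abort one transaction on that cycle (the "victim") so the others can proceed.

```python
from typing import Optional


def find_deadlock(waits_for: dict[str, list[str]]) -> Optional[list[str]]:
    """Return one cycle in the wait-for graph as a list of transaction ids, or None."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color: dict[str, int] = {}
    path: list[str] = []

    def dfs(txn: str) -> Optional[list[str]]:
        color[txn] = GRAY
        path.append(txn)
        for blocker in waits_for.get(txn, []):
            state = color.get(blocker, WHITE)
            if state == GRAY:
                # The blocker is already on the current path: we found a cycle.
                return path[path.index(blocker):]
            if state == WHITE:
                cycle = dfs(blocker)
                if cycle:
                    return cycle
        path.pop()
        color[txn] = BLACK
        return None

    for txn in list(waits_for):
        if color.get(txn, WHITE) == WHITE:
            cycle = dfs(txn)
            if cycle:
                return cycle
    return None


if __name__ == "__main__":
    print(find_deadlock({"T1": ["T2"], "T2": ["T1"]}))  # ['T1', 'T2']
    print(find_deadlock({"T1": ["T2"], "T2": ["T3"]}))  # None
```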

Benefits:

Pessimistic concurrency control is most effective in environments where:

- High levels of transaction contention are expected.

- Data integrity must be strictly maintained, such as in financial applications or systems with critical data updates, where having a transaction aborted and restarted at commit time (as happens under optimistic control when a conflict is detected) is undesirable.

In contrast, optimistic concurrency control may be more efficient in scenarios where conflicts are rare, allowing for greater concurrency without the overhead of locking mechanisms.

Conclusion

The choice between pessimistic and optimistic concurrency control methods depends on the specific environment and workload characteristics. Pessimistic control is suited for high-conflict scenarios, ensuring that transactions do not interfere with each other, while optimistic control can enhance performance in low-conflict environments by allowing greater transaction throughput.

Happy reading, and I hope you find something useful in this post.